The Importance of AI in Moderation Solutions

Moderating digital content fairly and neutrally is an increasingly complex challenge. Human moderators can introduce bias unconsciously, shaped by their personal beliefs and by societal standards and norms. AI promises a more structured approach to moderating content. An MIT study found that AI identified offensive material 85% of the time, compared with a human average of 72%.

What Makes AI Better Than Human Moderation

Unlike human moderators, AI tools are not affected by tiredness or emotion, which results in much more consistent moderation decisions. The largest social platforms report that implementing AI has reduced the time it takes to detect and act on harmful content from hours to minutes, improving response times by a reported 50%.

Improving Equity Through Algorithmic Training

To reduce bias, AI systems are trained on diverse datasets that represent different demographics and opinions. Developers work to make sure their algorithms pick up on cultural and linguistic nuance so that the AI takes a balanced view. Studies of mainstream datasets suggest that training on diversified data can reduce biased content flagging by up to 30%.

Problems with AI Moderation

AI systems can reduce some types of human bias, but they will never be entirely bias-free. When AI is trained on biased data, those biases can be reflected in its moderation decisions. AI models must therefore be audited and updated continuously to avoid replicating historical biases.
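One simple form such an audit could take is comparing how often content from different groups gets flagged. The sketch below is illustrative only: the function names, the group labels, and the sample data are all assumptions, not taken from any particular platform. A gap in flag rates is a signal to investigate rather than proof of bias, since the underlying content may genuinely differ between groups.

```python
from collections import defaultdict


def flag_rates(decisions):
    """Return the share of flagged items per group.

    `decisions` is an iterable of (group, flagged) pairs, where `group` is a
    hypothetical label such as a language or region and `flagged` is a bool.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in decisions:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in flag rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Made-up sample: 1 of 4 "en" items flagged, 2 of 4 "es" items flagged.
sample = [
    ("en", True), ("en", False), ("en", False), ("en", False),
    ("es", True), ("es", True), ("es", False), ("es", False),
]
rates = flag_rates(sample)
print(rates)
print(max_rate_gap(rates))
```

Running a check like this on a regular schedule, and after every model update, is one way to notice when a model starts treating groups differently.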

Incorporating Human Oversight

To strike this balance, many organizations follow a hybrid model in which AI handles most moderation and humans step in only for complex decisions, mostly those that require contextual judgment. This approach takes advantage of AI's productivity while bringing human compassion and insight to high-stakes and ambiguous cases. Reported figures suggest that hybrid moderation can increase accuracy to as much as 95%.
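A hybrid model of this kind is often described in terms of confidence thresholds: the AI acts on its own when it is very sure, and everything in between goes to a person. The following is a minimal sketch under that assumption. The threshold values, the `Decision` type, and the `route` function are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
AUTO_REMOVE_THRESHOLD = 0.95
AUTO_ALLOW_THRESHOLD = 0.05


@dataclass
class Decision:
    action: str  # "remove", "allow", or "human_review"
    score: float


def route(score: float) -> Decision:
    """Route one item based on a model's estimated probability of harm."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= AUTO_REMOVE_THRESHOLD:
        return Decision("remove", score)
    if score <= AUTO_ALLOW_THRESHOLD:
        return Decision("allow", score)
    # Ambiguous cases are left to a human moderator.
    return Decision("human_review", score)


if __name__ == "__main__":
    for s in (0.99, 0.5, 0.01):
        print(s, route(s).action)
```

Moving the two thresholds closer together sends fewer items to human review but leaves more borderline calls to the model, so where to set them is a policy decision as much as a technical one.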

Ethics and Transparency

Building ethical AI for content moderation is imperative. To establish user trust, companies need to be more transparent about how their AI models work and how decisions are reached. This includes clear guidelines and accountability standards that ensure AI applications are used safely.

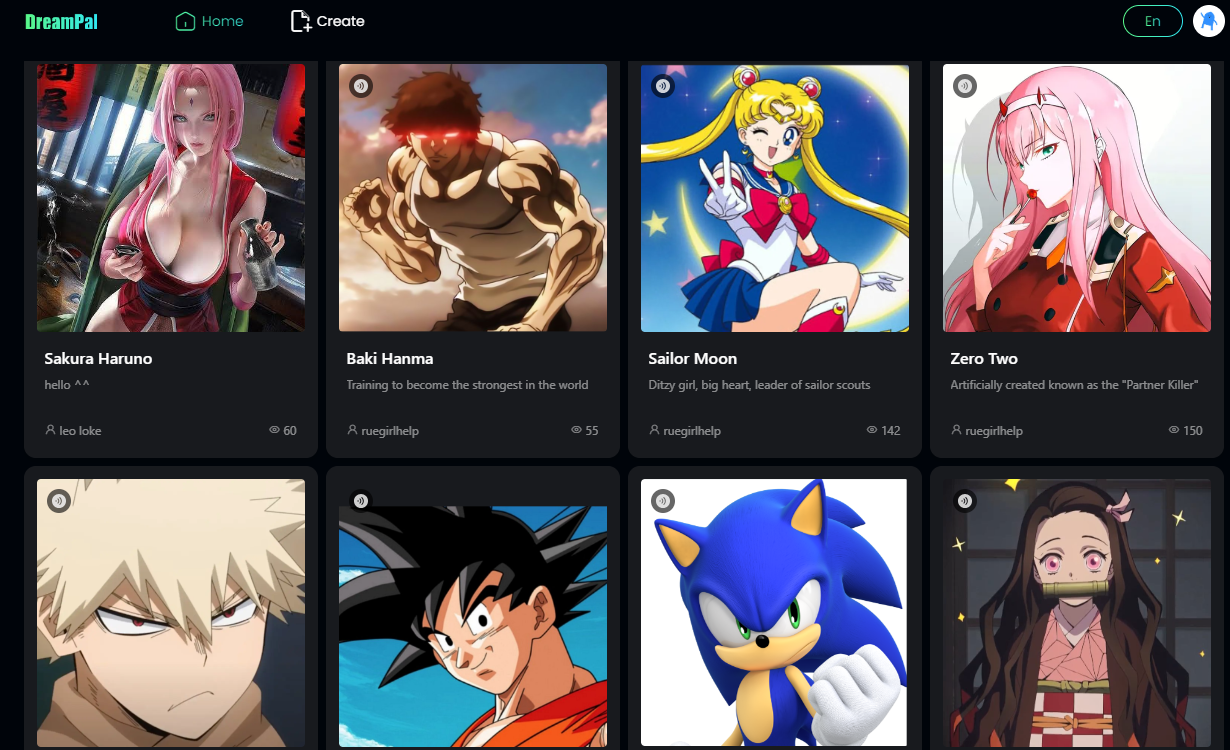

Digging into specific resources can illustrate how AI in general, and NSFW AI in particular, is used to make content moderation fairer, and can give a clearer picture of how well and under what circumstances these technologies can build fairer digital spaces.